Category: General

-

Back in the Blog Game

Dear Readers, Happy New Year 2024! As we step into a year full of potential, I find myself reflecting on the past and looking forward to new beginnings. You might recall that my last post was some time ago. The intervening years have been a blend of personal milestones and professional endeavors. Raising three amazing…

-

Why you should not use JustCloud for your backups!

Today I want to share my experience using JustCloud to back up my personal files. I started looking for a cloud backup solution about two years ago: I had accumulated a considerable amount of personal data that I did not want to lose, and I knew that personal backups such as external…

-

The early days of Quantitative Finance

A lot of people start studying Quantitative Finance because they see it as a great way to earn money. It does seem reasonable that somebody with great mathematical skills would look for opportunities to make a profit, sometimes at the expense of others. I can still remember, as if it were yesterday, seeing a…

-

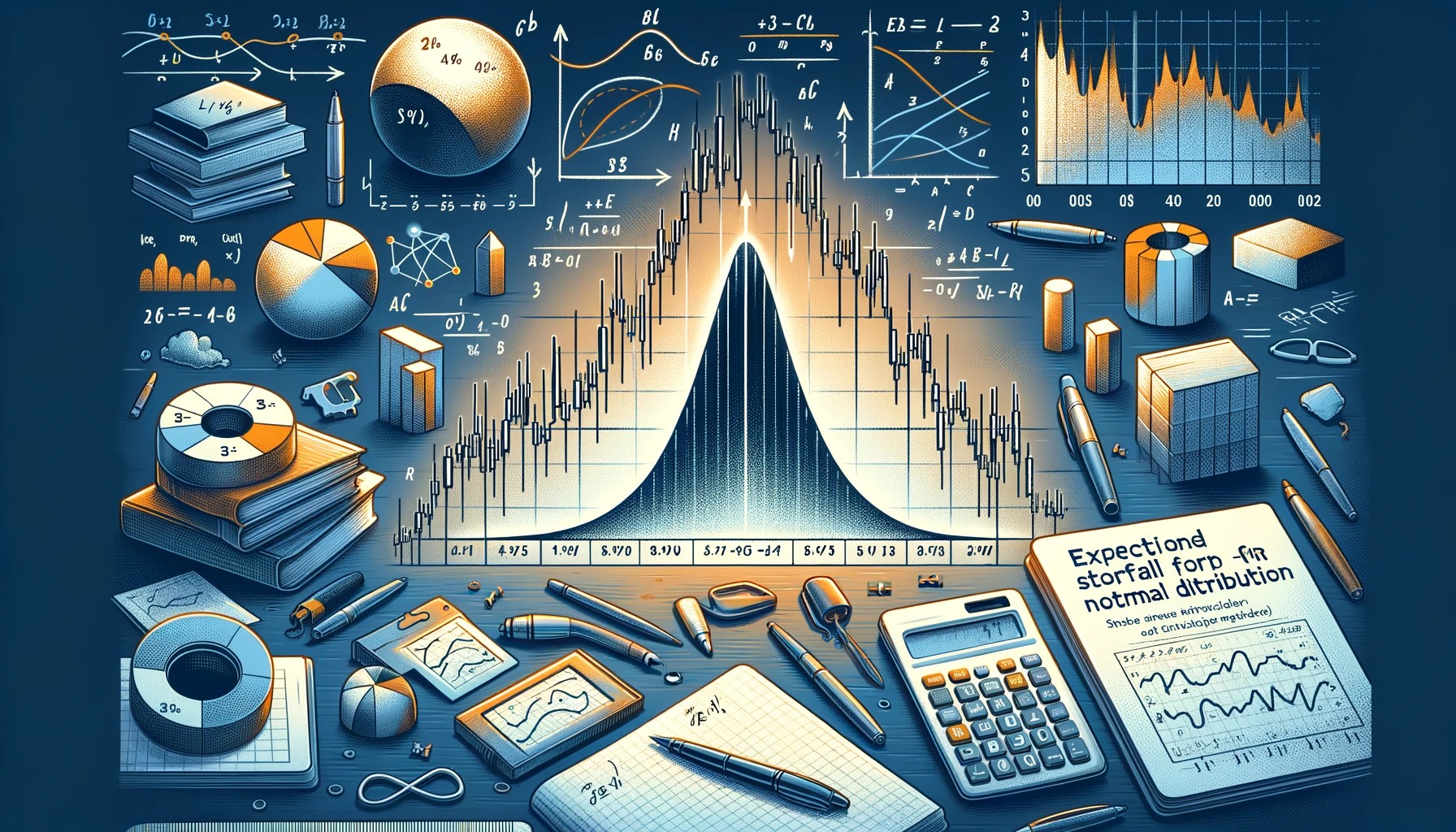

Expected Shortfall closed-form for Normal distribution

Today, I would like to share a little exercise I did to compute the Expected Shortfall of a normally distributed variable. For those of you unfamiliar with this risk measure, it is the average of the worst $(1-\alpha)$ fraction of outcomes of a probability distribution (an example and formal definition follow). If you have enough data, the…
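
As a quick sanity check of the kind of result this exercise arrives at, here is a minimal sketch. It assumes $X$ denotes a *loss* (larger is worse) with $X \sim \mathcal{N}(\mu, \sigma^2)$ and that $\alpha$ is the confidence level (e.g. 0.975). The post's own sign convention may differ, for instance if $X$ is a P&L rather than a loss. Under this assumption, using $\int_{z}^{\infty} t\,\varphi(t)\,dt = \varphi(z)$, the closed form is

$$\mathrm{ES}_\alpha(X) = \mathbb{E}\left[X \mid X \ge \mathrm{VaR}_\alpha(X)\right] = \mu + \sigma \, \frac{\varphi\left(\Phi^{-1}(\alpha)\right)}{1-\alpha},$$

where $\varphi$ and $\Phi$ are the standard normal density and CDF. The function names below are hypothetical; the code compares the closed form with a plain Monte Carlo estimate.

```python
import random
from statistics import NormalDist


def expected_shortfall_normal(mu: float, sigma: float, alpha: float) -> float:
    """Closed-form ES of a loss X ~ N(mu, sigma^2) at confidence level alpha.

    Assumption: X is a loss (larger = worse), so ES is the mean of X over its
    worst (1 - alpha) tail: mu + sigma * phi(z_alpha) / (1 - alpha).
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    std = NormalDist()          # standard normal N(0, 1)
    z = std.inv_cdf(alpha)      # standardized alpha-quantile, i.e. the VaR of Z
    return mu + sigma * std.pdf(z) / (1.0 - alpha)


def expected_shortfall_mc(mu: float, sigma: float, alpha: float,
                          n: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate: average of the largest (1 - alpha) share of samples."""
    rng = random.Random(seed)
    losses = sorted(rng.gauss(mu, sigma) for _ in range(n))
    k = int(alpha * n)          # first index beyond the empirical alpha-quantile
    tail = losses[k:]           # the worst (1 - alpha) share of simulated losses
    return sum(tail) / len(tail)


if __name__ == "__main__":
    for a in (0.95, 0.975, 0.99):
        exact = expected_shortfall_normal(0.0, 1.0, a)
        approx = expected_shortfall_mc(0.0, 1.0, a)
        print(f"alpha={a}: closed form {exact:.4f}, Monte Carlo {approx:.4f}")
```

For the standard normal, the closed form gives roughly 2.338 at $\alpha = 0.975$ and 2.665 at $\alpha = 0.99$, always above the corresponding VaR, as it should be since ES averages over the whole tail beyond the quantile.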

-

New challenges in Hong Kong!

Hello everyone! Since arriving in Hong Kong two years ago, I haven't had many chances to contribute to this blog. There are multiple reasons for this. First of all, my work at Noble Group took a considerable amount of my time and energy. It was my first experience in the physical commodity market, and…